Recently, lawmakers on Capitol Hill held a hearing with the CEOs of the world’s largest social media companies. The intense grilling lasted more than five hours and covered a wide range of issues, from misinformation and extremism to coronavirus and climate change. Lawmakers asked the heads of Google, Facebook and Twitter pointed questions, including whether they felt their platforms were responsible for the January 6 attack on the Capitol in Washington, D.C. (only Twitter’s Jack Dorsey agreed). But much of the session focused on potential regulations to protect users from harmful content, including all-new legislation or reforms to Section 230 of the Communications Decency Act, which shields social media platforms from lawsuits tied to content their users post.

As social media figureheads and lawmakers grapple with potential regulations stemming from concerns over the platforms’ ability to police the content shared on their sites, Escalent wanted to find out what the people think. Following the January 6 attack, we conducted a study among American citizens of all ages, genders, geographies and political views to dig into these issues and give social media platforms a clearer picture. Specifically, we wanted to know whether people agree on social media companies’ ability to police themselves when their members promote or glorify violence, and, more importantly, what to do about it.

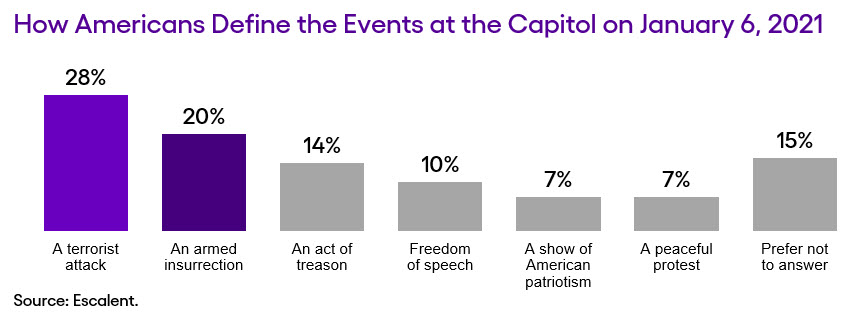

First, we needed to understand how our respondents felt about that fateful day in January. While the responses were somewhat mixed, nearly half categorized the day’s events as inherently violent, with another 14% calling the acts treasonous. Conversely, 24% felt the day was marked by expressions of “freedom of speech,” “a show of patriotism” and “a peaceful protest.”

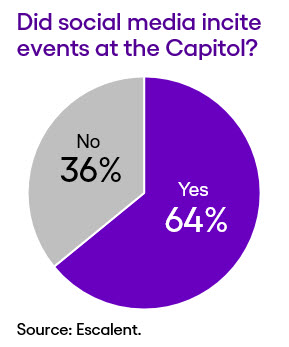

Digging deeper into the relationship between the attack and the role of social media, nearly two-thirds of our respondents felt social media did, in fact, play a role in inciting violence for that particular event. Not only did our respondents feel social media played a role, but 75% also felt social media companies should intervene when a user’s content promotes or glorifies violence.

While there’s broad agreement on the need to address such content, what is less clear is just how social media companies should respond. Teasing out the nuances of what people believe and finding common ground will be critical to setting terms and regulations that effectively protect and manage these platforms going forward. And, while there are varying degrees of agreement and disagreement across age groups, demographics and political affiliations, our data show one area that is relevant to all: freedom of speech. For social media companies looking to avoid government regulations and win the popular vote, the key is to find the common threads to unite the public and lawmakers in the name of that sacred freedom.

Understanding the Stakes

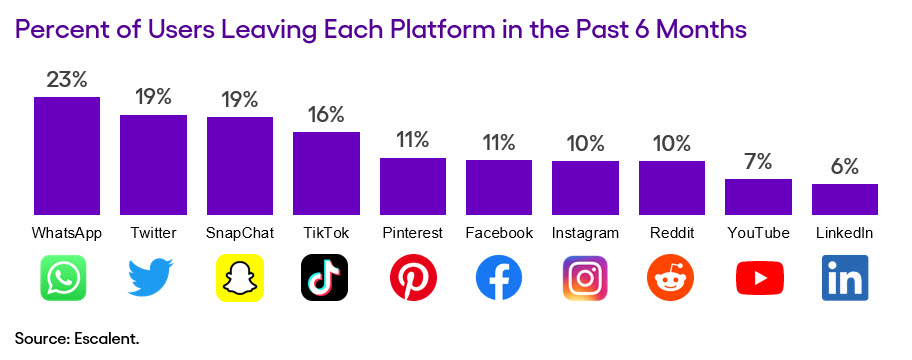

As with publishers and their audiences, the value of social media networks is typically derived from their user bases: the size, scope, diversity, frequency of use, amount consumed and more. And, while it’s hard to imagine a widespread boycott of social communities that have so fully permeated the digital experience, some respondents told us they’ve already taken that step. A surprising number said they’ve stopped using a network they were active on within the past six months.

Ultimately, social media companies will have to come to grips with the fact that a perceived inability or unwillingness to self-police controversial content may hurt their bottom line. To that end, working with legislators to show a genuine effort to curb such activity may strengthen their brands and customer loyalty and, in turn, revenue.

Want to know more? Download our report, How Silicon Valley Can Win the Social Media Cold War, to get more specifics on how social media companies should police their platforms, the impact of current conspiracy theories on popular opinion and what these companies should do to ensure a seat at the table with lawmakers.